Endemic AI problems. Cybercrime high. Shrugging is our response.

PLUS a near-future court case based on new technology, a thought-provoking quote and menu mistakes

For some reason today I felt frustrated with our naïve and sometimes short-sighted reactions to AI, cybersecurity, privacy and other critical technological issues. There is a slight chance it may have crept into my writing.

MAIN COURSE: Shrug 1: Who cares about the problems with AI?

This week a new rigorous study came out from Apple that had some hard-hitting conclusions about the limitations of LLMs / LRMs (Large Reasoning Models) [1]. You get a flavour of the conclusion from the title: The Illusion of Thinking.

Perhaps you read about it. No, you likely didn’t because we are immersed in a world of total AI hype and no ray of reality is allowed in. Let me highlight some of the key points for you directly from the report:

The more complex the problem, the more quickly both LLMs and LRMs give up

On simple problems LLMs are actually better than LRMs, which is counterintuitive

The LRMs were guilty of “over-reasoning” on simple problems

The LRMs stopped their reasoning quickly on complex problems even when access to compute wasn’t an issue

When researchers told the AI models the solution path, the models couldn’t execute it

The reasoning puzzles used to test these models were widely available and likely had been ingested during training

Which basically means what we have now is what we are going to have to live with and adapt to until some architectural and structural changes are made under the covers. Inaccurate and unpredictable assistants that really cannot help with substantive thinking and reasoning except with familiar pattern recognition.

Let me repeat, without substantial changes AI models aren’t going to get significantly better. They are structurally unable to. (More about that here for those that are interested)

Yet most people treat AI as godlike magic, and don’t begin to appreciate the extent of the shortcomings. Responses I have had to some of the “AI screws up” examples I have written about over the last few months include:

Errors don’t happen that often (the error rate is actually quite high and unpredictable)

People make mistakes too (but of a different nature)

It is too late anyway or just go with the flow (defeatist)

Who cares about being perfect or accurate? They make me more productive (these people deserve AI slop)

You need to get on board as this is the future (I am on board, but with changing the way they should be utilized, personally and in business organizations)

So basically a shrug.

The very first thing to do with each and every output you get from an AI model is to check the results. Studies show that the majority of users are NOT doing that. Here are two examples from last week of friends using AI for the same thing: vacation planning for road trips. ChatGPT was used in both cases and surfaced some great places to stay, to eat at, and to see. When I looked at the daily travel plans, in both cases the timing was far too aggressive, especially when adding in suggested side attractions.

As an example, Miss Chatty said one leg could be done in 6.5 hours. Not. Even. Close. In summer, on undivided roads, even two teenagers speeding while on speed, driving at night when there are no cars, would take 8.5 hours minimum. Neither person had checked any of this, just presuming perhaps that ChatGPT had checked Google Maps. It cannot access maps nor does it have any way to validate any of its recommendations.
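For anyone who wants to do this kind of check quickly, here is a minimal sketch in Python. It only divides distance by the claimed driving time and compares the result with a speed you think is realistic. The 580 km leg length and the 70 km/h ceiling for summer driving on undivided roads are my own illustrative assumptions, not figures from either trip plan; only the 6.5 and 8.5 hours come from the example above.

```python
def implied_speed_kmh(distance_km: float, hours: float) -> float:
    """Average speed a plan silently assumes for one leg of the trip."""
    if hours <= 0:
        raise ValueError("hours must be positive")
    return distance_km / hours


def is_plausible(distance_km: float, claimed_hours: float,
                 max_avg_kmh: float = 70.0) -> bool:
    """True if the claimed time needs no more than max_avg_kmh on average.

    max_avg_kmh is an assumed ceiling for undivided summer roads;
    adjust it to the roads you actually know.
    """
    return implied_speed_kmh(distance_km, claimed_hours) <= max_avg_kmh


if __name__ == "__main__":
    leg_km = 580.0  # hypothetical leg length, look up the real one on a map
    for hours in (6.5, 8.5):  # the AI's claim, then my own estimate
        speed = implied_speed_kmh(leg_km, hours)
        verdict = "plausible" if is_plausible(leg_km, hours) else "too aggressive"
        print(f"{hours} h implies {speed:.1f} km/h average: {verdict}")
```

Under those assumed numbers the 6.5 hour claim needs an average of roughly 89 km/h including every stop, which is the kind of figure that should make you reach for a real map.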

Mistakes all the time. Here is a personally perplexing one from Saturday using Perplexity:

My wife says, “I saw a sandwich board advertising the Bach choir. There must be a concert soon. Do you want to go?”

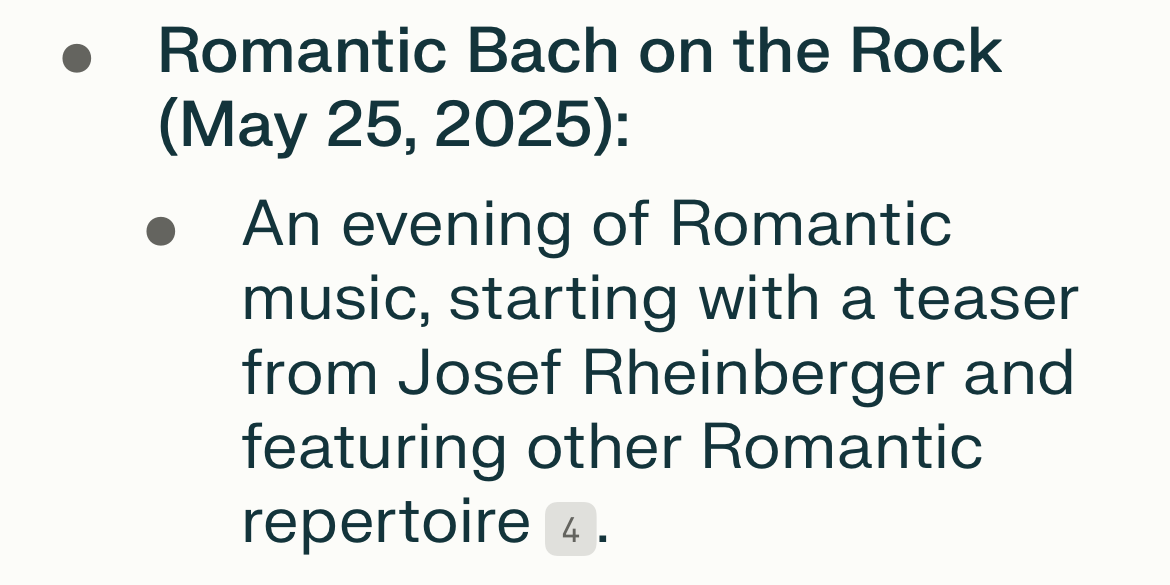

QUESTION (Prompt): “When are there performances of the Bach on the Rock choir on Salt Spring Island?”

PERPLEXITY RESPONSE below showing the last concert was in May.

CLICKED LINK (shown as 4 above) to check, which led to our community bulletin board (below), showing a concert Saturday night.

So not shrugging means getting to go to a concert. Shrugging means chaos on a road trip by the second day.

SPECIAL FISH: We still can’t or won’t smell the rottenness of cybercrime

My normal Sunday reading in bed included an assessment of cybercrime and hospitals, which are hacked frequently. Why, you may ask? Because they are a treasure trove of personal data - contact information, detailed health records, and payment information - which can be harvested, bundled, and sold in so many ways. Plus hospitals pay up quickly to resume operations. So attractive to criminals. As these crooks cut their way through the digital innards of hospitals they cause lots of side effects in terms of patient safety: cancelled surgeries, even deaths.

There must be a hue and cry about this. Surely there are dozens of activist groups urging us on to much better cybersecurity for our most vital social institutions.

Pfft! Nowhere. The stories are seldom front page and the writing about these crimes is ill-informed. There are no loud calls for accountability. But when your readers are already comfortable with years of having their data sold in this manner by Meta, Google, YouTube and others, such things are received with a Gallic shrug, as we say, “Je ne sais pas” (For my American readers this means we didn’t vote for Trump).

Or “Surely, David, you must be exaggerating”. In 2024 these attacks only affected, checks the proverbial and trendy notes, 237 million individuals. That’s only two-thirds of the United States so no sweat at all.

What really made me sit up and spill my tea was this quote: “There is a state of mind that hackers are moral,” said Itay Glick, director of product at security firm OPSWAT. “We need to understand that not all the attack groups share the same ethical standards that we think they should.”

I lay that delusional attitude directly at Hollywood’s feet and their two repetitive tropes about cybercrime: genius-level bad actors from nefarious foreign countries, and misunderstood teenage geniuses who are usually trying to do good. Plus the media’s fascination with hacktivists. Sorry, the operative part of this is the CRIME suffix in the word cybercrime.

What is moral about bringing a hospital to its knees?

“A few surgeries were cancelled and one guy died, but he was getting on anyway. Besides we were really protesting Big Pharma but we decided to also get a large payoff from the hospital because we felt they were greedy bastards as well. Plus we made another $25 million selling your health records which went to a very good cause.”

Said no criminal ever

There are so many different things all business organizations can do to greatly reduce these cyber intrusions, from upgrading old hardware and software to better implementation of cybersecurity software. But they seem content to just make payouts from cybercrime insurance, and we have already mastered the Gallic shrug.

MENU MISTAKES

So why are we going to Mars? Aaah, that’s right Jeff and Elon are rich enough already.

How would they know? If you cannot understand the basics of international trade, budgeting, and the rule of law, I am not sure you are a reliable reporter.

QUICKBYTES: A court transcript from a few months from now

JUDGE: Begin your defence, counsellor.

DEFENCE LAWYER: You heard the prosecutor imply that my client was a drug dealer. What a smear! His contention is based solely on evidence that my client’s eDNA was found in the air near the eDNA of opium and also the eDNA of convicted drug kingpin REDACTED. We will demonstrate to this court, based on expert testimony from a world-class meteorologist, that the winds on the day the samples were collected were particularly gusty. Furthermore, we will have a horticulturalist present evidence that the opium eDNA could have been from an ordinary garden poppy.

PROSECUTOR (jumping up angrily): Objection! Sidebar, Your Honour. Recent cases have decided positively that the eDNA of opium has distinct genetic markers that differentiate it from garden poppies. Besides, these eDNA samples were found in a gravel pit miles from any garden flowers.

Photo above of AirPrep™ Bobcat Air Sampler ready to find evidence to accuse you.

This little courtroom scenario could play out soon. The science of hoovering up the air and then identifying the detritus of living cells found amongst all the other flotsam in the air is getting pretty good. The genetic material that humans and all other living things shed into our environment is called, surprisingly, environmental DNA, or eDNA for short. And it can be readily identified as per this report.

Here is another privacy issue for you to worry about @neela, who recently wrote about other major technology privacy concerns.

A LITTLE SPICE

The perturbing thought is that some of the ideas we hold today — which we could defend in thoughtful, enlightened-seeming terms — will appear to our descendants as rank bigotry.

James Marriott

Thanks very much for reading. I greatly appreciate comments, reader submissions, likes, restacks and all that visible feedback. It keeps me going!

[1] LRMs are specifically trained to solve complex, multi-step problems using structured reasoning techniques. This includes deductive, inductive, abductive, and analogical reasoning, allowing them to analyze, break down, and logically solve tasks that require more than simple pattern recognition.

Every single tech revolution in history has started with a disastrously famous beginning. Thinking back to my youth as computers, then gaming systems, then cell phones hit the stage, there were always more promises than productivity. On another note, I'm eerily fascinated by the prowess of individuals that can squeeze behind doors we don't even know exist and horrified at the same time.

Thank you for the insightful information.

When productivity is your only goal, quality and even logic don't count. How long do you think this phase will last?